Nice summary by longtime colleague and arch argument mapper Tim van Gelder. “The pivotal element here obviously is Track, i.e. measure predictive accuracy using a proper scoring rule.” If “ACERA” sounds familiar, it’s because they were part of our team when we were DAGGRE: they ran several experiments on and in parallel to the site.

Accuracy Contest: First round questions

The first round of questions has been selected for the new accuracy contest. Forecasts on these questions from November 7, 2014, through December 6, 2014, have their market scores calculated and added to a person’s “portfolio.” The best portfolios at a time shortly after March 7, 2015, will win big prizes.

Candy Guessing

Our school had a candy guessing contest for Hallowe’en. There were three Jars of Unusual Shape, and various sizes.

The spirit of Francis Galton demanded that I look at the data. Candy guessing, like measuring temperature, is a classic case where averaging multiple readings from different sensors is expected to do very well. Was the crowd wise? Yes.

- The unweighted average beat:

  - 67% of guessers on Jar 1

  - 78% of guessers on Jar 2

  - 97% of guessers on Jar 3, and

  - 97% of guessers overall

- The median beat:

  - 89% of guessers on Jar 1

  - 83% of guessers on Jar 2

  - 78% of guessers on Jar 3, and

  - 97% of guessers overall

There were 36 people who guessed all three jars (one anonymous blogger who guessed only one jar was excluded from the analysis). Only one of them beat the unweighted average: the top guesser had an overall error of 9%, while the unweighted average had an overall error of 11%. Two other guessers came close, with an average error of 12%. The worst guessers had overall error rates greater than 100%, with the worst being 193% too high.

The unweighted average was never the best on a single jar, though on Jar 3 it was off by only 1. (The best guesser on Jar 3 was exactly correct.)

The measure I used was the overall Average Absolute %Error. The individual rankings change slightly if we instead use Absolute Average %Error, but the main result holds.
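For readers who want to try this on their own jar data, here is a minimal sketch of the comparison. It rests on my reading of the two measures: Average Absolute %Error as the mean over jars of each jar's absolute percent error, and Absolute Average %Error as the absolute value of the mean signed percent error (so over- and under-guesses can cancel). The function names, jar counts, and guesses below are all made up for illustration; they are not the school's data.

```python
from statistics import mean, median

def pct_error(guess, truth):
    """Signed percent error of a single guess."""
    return 100.0 * (guess - truth) / truth

def avg_abs_pct_error(guesses, truths):
    """Average Absolute %Error: mean over jars of |%error|."""
    return mean(abs(pct_error(g, t)) for g, t in zip(guesses, truths))

def abs_avg_pct_error(guesses, truths):
    """Absolute Average %Error: |mean signed %error| (errors may cancel)."""
    return abs(mean(pct_error(g, t) for g, t in zip(guesses, truths)))

def share_beaten(crowd_score, individual_scores):
    """Fraction of guessers with a strictly larger error than the crowd."""
    worse = sum(score > crowd_score for score in individual_scores)
    return worse / len(individual_scores)

# Hypothetical true counts for three jars, and hypothetical guesses.
truths = [100, 200, 400]
guesses = {
    "a": [90, 260, 380],
    "b": [150, 180, 500],
    "c": [80, 150, 300],
    "d": [120, 400, 420],
}

# Crowd estimates: combine everyone's guess jar by jar.
per_jar = list(zip(*guesses.values()))
crowd_mean = [mean(col) for col in per_jar]
crowd_median = [median(col) for col in per_jar]

scores = {name: avg_abs_pct_error(g, truths) for name, g in guesses.items()}
mean_score = avg_abs_pct_error(crowd_mean, truths)
median_score = avg_abs_pct_error(crowd_median, truths)

print(f"unweighted average: {mean_score:.2f}% error, "
      f"beats {share_beaten(mean_score, scores.values()):.0%} of guessers")
print(f"median: {median_score:.2f}% error, "
      f"beats {share_beaten(median_score, scores.values()):.0%} of guessers")
```

Swapping `avg_abs_pct_error` for `abs_avg_pct_error` when building `scores` shows how the rankings can shift between the two measures.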

SciCast Recruitment Announcement

SciCast is running a new special! The most accurate forecasters during the special will receive Amazon Gift Cards:

• The top 15 participants will win $2250* to spend at Amazon.com

• The other 135 of the top 150 participants will win $225 to spend at Amazon.com

US Flu Forecast: Exploring links between national and regional level seasonal characteristics

For the flu forecasting challenge (https://scicast.org/flu), participants are required to predict several flu season characteristics at the national level and at the regional level (10 HHS regions). For some of the required quantities, such as peak percentage influenza-like illness (ILI) and total seasonal ILI count, one may argue that national level values have some relationship with the regional level ones. In other words, participants may be led to believe that national level statistics can be obtained from regional level ones.

Boo! Shadow forecasts on SciCast

In time for Hallowe’en, we’ve added Shadow Forecasts and other features to help show the awesome power of combo.

- Shadowy: when linked forecasts affect a question, we show “Shadow Forecasts” in the history and the trend graph.

- Pointy: the trend graph now shows one point per forecast instead of just the nightly snapshot.

- Chatty: Comment while you forecast. (But not while you drive.)

Read on for news about upcoming prizes.

Q&A with SciCaster Julie J.C.H. Ryan

SciCasters represent a variety of communities – academics, professionals, enthusiasts, even students. Find out how one professor built SciCast into her curriculum – and led students by example.

Meet SciCaster Julie J.C.H. Ryan, Associate Professor, Engineering Management and Systems Engineering, George Washington University.

Q: Why SciCast in the classroom?

I was intrigued by the potential and explored several alternatives with the George Mason folks. I decided to use SciCast as a practical learning exercise for a tech forecasting course that I was teaching in the spring. I provide opportunities for students to learn through guided experiences. I integrate a lot of exercises in my classes so that students are engaged in active learning through incremental explorations of the material.

Will Philae land successfully on the surface of a comet?

Background

SciCasters are following the ESA’s International Rosetta Mission and counting down the days to the much-anticipated landing of Philae on the periodic comet 67P/Churyumov-Gerasimenko. The latest news from ESA states that it will deploy Philae to the comet on November 12. http://bit.ly/1DQEAcy

SciCast/ACS Webinar – Forecasting Chemistry: Predicting Tomorrow’s Cutting Edge Science, Today

Last month, SciCast joined ACS for a webinar, Forecasting Chemistry: Predicting Tomorrow’s Cutting Edge Science, Today.

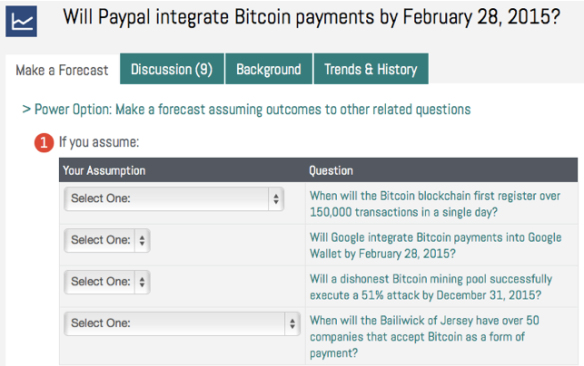

New Approach to Combo Forecasts…

Tonight’s release streamlines combo trades, adds some per-question rank feedback, prettifies resolutions, and disables recurring edits.

We’ve redone the approach to trading linked questions. Now if the question is linked to other questions, you can make any desired assumptions right from the main trade screen.